Facial animation is the magic that brings inanimate characters to life. It involves animating various parts of the face to create facial expressions.

Facial animation is used extensively in the animation industry. It lets characters convey intricate emotions and keeps their dialogue in sync with their lip and mouth movements. Without it, feature animation could not tell stories as extensive, or stage character interactions as detailed, as live-action films do.

In this guide, we will explore what facial animation is, its core techniques, and how we can efficiently implement it in our projects.


What is Facial Animation?

How do animated characters convey emotions as vividly as real actors? Facial animation is the answer!

Facial animation is the art and science of animating a character’s face to express emotions, sync dialogue, and bring stories to life. It enables nuanced facial expressions like a smooth grin or a furrowed brow, making characters relatable in films, games, and virtual worlds.

Facial animation is an essential part of 3D Animation, 2D Animation, and emerging fields like VR and AR, since it is one of the fundamental building blocks of immersive storytelling.

From blockbuster movies to mobile apps, it’s a cornerstone of character animation, blending creativity with technical precision.

The Core Techniques in Facial Animation

Facial animation creates expressive character movements using specialized techniques for 2D and 3D workflows.

Each of these methods requires a deep understanding of emotion mapping to animate facial expressions.

The following techniques are used in the animation pipeline:

- Facial Rigging in 3D Animation: Artists add digital bones to facial features like the eyes, lips, and eyebrows and connect them to manipulation handles (controls). These allow precise sculpting of facial expressions, such as a raised eyebrow or a clenched jaw.

- Blendshapes for Smooth Transitions: Sculpted target shapes, each a set of predefined vertex positions, morph the facial geometry, for example into an open mouth. Sliders interpolate between the neutral face and each target, and blendshapes are often combined with bone rigs for efficiency.

- 2D Animation Shape Libraries: Artists draw multiple mouth and eye shapes for lip sync animation. Each shape matches phonemes (like “oo” or “ah”) or emotions (such as wide eyes for shock).

- Hybrid Approaches: Combining 2D assets with motion graphics adds dynamic effects, common in web-based character animation.
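The rigging bullet above can be made concrete with linear blend skinning, a common way bone-driven rigs deform a mesh: each vertex follows a weighted mix of the transforms of the bones that influence it. The sketch below is a simplified 2D illustration under stated assumptions: a hypothetical jaw bone rotating about a pivot, a head bone that stays still, and hand-picked skin weights. A chin vertex fully weighted to the jaw swings with it, while a lip-corner vertex weighted 50/50 moves only part of the way, which is how rigs soften the transition between jaw and cheek.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def rotate_about(point: Vec2, pivot: Vec2, angle_rad: float) -> Vec2:
    """Rotate a point around a bone's pivot (its joint) by angle_rad."""
    px, py = point[0] - pivot[0], point[1] - pivot[1]
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (pivot[0] + c * px - s * py, pivot[1] + s * px + c * py)


def skin_vertex(rest: Vec2, jaw_weight: float, jaw_pivot: Vec2, jaw_angle: float) -> Vec2:
    """Two-bone linear blend skinning: a static head bone and a hypothetical jaw bone."""
    head_pos = rest  # the head bone does not move in this sketch
    jaw_pos = rotate_about(rest, jaw_pivot, jaw_angle)
    head_weight = 1.0 - jaw_weight  # skin weights per vertex sum to 1
    return (head_weight * head_pos[0] + jaw_weight * jaw_pos[0],
            head_weight * head_pos[1] + jaw_weight * jaw_pos[1])


# Open the jaw by 20 degrees around a pivot at the origin.
pivot = (0.0, 0.0)
angle = math.radians(-20)
chin = skin_vertex((1.0, 0.0), jaw_weight=1.0, jaw_pivot=pivot, jaw_angle=angle)
lip_corner = skin_vertex((1.0, 0.0), jaw_weight=0.5, jaw_pivot=pivot, jaw_angle=angle)
print(chin, lip_corner)
```

Production rigs use full 3D transforms, many more bones, and painted weights, but the blending rule is the same weighted sum shown here.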

In 3D Animation, rigging demands knowledge of facial anatomy to ensure natural deformations.

For example, a smile must stretch skin realistically without distorting textures. Blendshapes simplify this by automating transitions, saving time on complex scenes.
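The blendshape approach reduces to a weighted sum: each sculpted target stores vertex positions, and each slider scales that target's offset from the neutral face. This is a minimal sketch assuming a toy three-vertex mesh and two hypothetical targets, `jaw_open` and `smile`; production rigs work on meshes with thousands of vertices and typically add corrective shapes, which are left out here.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(neutral: List[Vec3],
                      targets: Dict[str, List[Vec3]],
                      weights: Dict[str, float]) -> List[Vec3]:
    """Blend each vertex as neutral + sum(weight * (target - neutral))."""
    result: List[Vec3] = []
    for i, (nx, ny, nz) in enumerate(neutral):
        x, y, z = nx, ny, nz
        for name, w in weights.items():
            tx, ty, tz = targets[name][i]
            # Add this target's offset from the neutral pose, scaled by its slider.
            x += w * (tx - nx)
            y += w * (ty - ny)
            z += w * (tz - nz)
        result.append((x, y, z))
    return result


# Toy three-vertex "mouth" with two hypothetical sculpted targets.
NEUTRAL = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, -1.0, 0.0)]
TARGETS = {
    "jaw_open": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, -2.0, 0.0)],
    "smile": [(-0.2, 0.3, 0.0), (1.2, 0.3, 0.0), (0.5, -1.0, 0.0)],
}

half_open = apply_blendshapes(NEUTRAL, TARGETS, {"jaw_open": 0.5})
print(half_open)  # the lowest vertex moves halfway down, to y = -1.5
```

Because the offsets simply add up, several sliders can be active at once (for example a half-open jaw plus a full smile), which is what makes blendshapes convenient for layering expressions.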

In 2D Animation, pre-drawn shapes require meticulous planning to sync with dialogue, especially for long sequences. Both approaches prioritize authentic facial expressions, ensuring characters feel alive and relatable in CGI productions.
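For pre-drawn shape libraries, much of that planning comes down to a lookup table from sounds to drawings. The sketch assumes ARPAbet-style phoneme labels (the convention used by the CMU Pronouncing Dictionary) and a hypothetical set of drawing names loosely modeled on common cartoon mouth charts; it is not the format of any particular program. Unknown sounds fall back to a rest drawing so the sequence never has gaps.

```python
from typing import Dict, List

# Hypothetical 2D mouth library keyed by ARPAbet-style phoneme labels.
# Several phonemes share one drawing, which keeps the library small.
PHONEME_TO_SHAPE: Dict[str, str] = {
    "AA": "AI", "AE": "AI", "AH": "AI", "AY": "AI",
    "EH": "E", "IY": "E",
    "AO": "O", "OW": "O",
    "UW": "U", "W": "U",
    "M": "MBP", "B": "MBP", "P": "MBP",
    "F": "FV", "V": "FV",
    "L": "L",
}
REST_SHAPE = "rest"  # neutral drawing used when no specific shape exists


def shapes_for(phonemes: List[str]) -> List[str]:
    """Map a phoneme sequence to mouth drawings, falling back to the rest shape."""
    return [PHONEME_TO_SHAPE.get(p.upper(), REST_SHAPE) for p in phonemes]


# "hello" is roughly HH AH L OW in ARPAbet.
print(shapes_for(["HH", "AH", "L", "OW"]))
```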

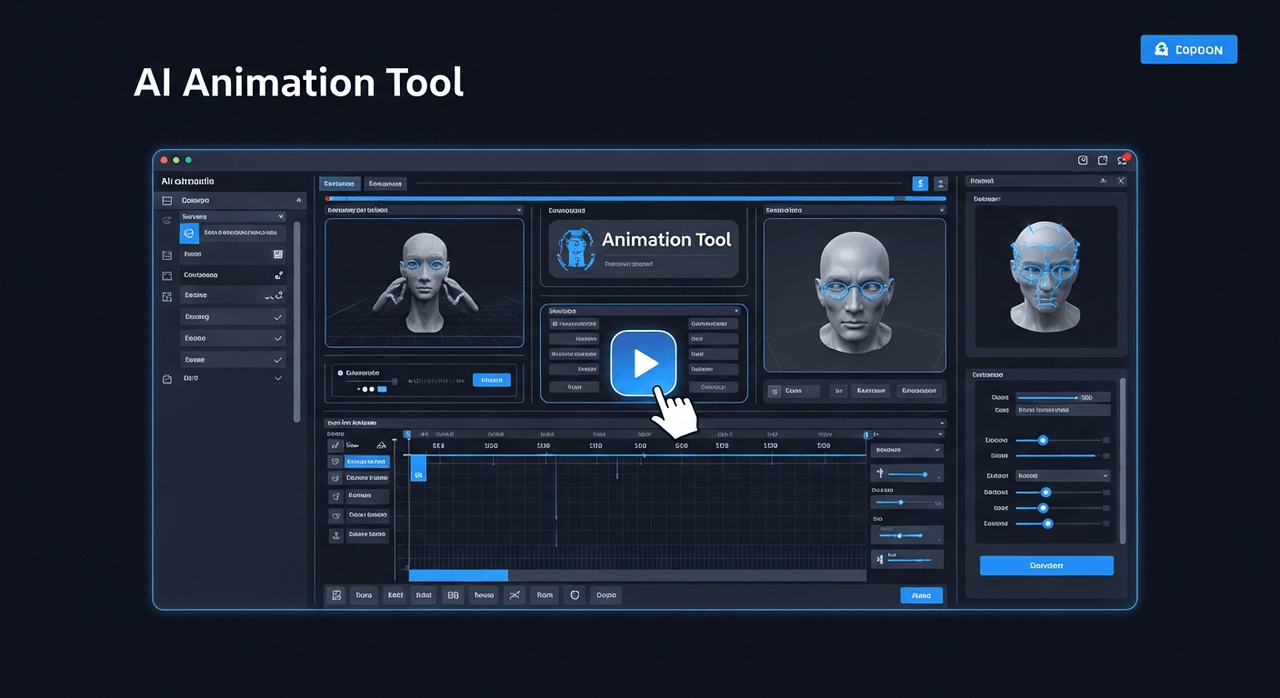

Tools For Facial Animation

Facial animation is often created using 3D or 2D animation software.

- For 3D characters, Maya, Blender, 3ds Max, and Cinema 4D are the primary programs used to animate faces. These are full-featured 3D animation tools that let artists build characters through modeling and add bones to them through rigging, the process described earlier.

- 2D facial animation is created using 2D animation and motion graphics software such as Toon Boom Harmony, Adobe Animate, and Adobe After Effects.

Creating 2D lip sync is relatively easy with these programs. Many 2D computer animations made today are cut-out animations for the web or TV series, which do not require highly accurate facial or mouth movements.

2D animation programs come with tools that can automate lip syncing. Although not as accurate as manually assigning mouth shapes for each corresponding sound, automated lip syncing saves animators a lot of time.
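Automated lip sync generally starts from a time-aligned track of sounds derived from the dialogue audio and converts it into mouth swaps on the animation's frame grid. The sketch below assumes that track already exists as `(start, end, shape)` segments in seconds; it snaps each segment to frames, skips segments too short to appear on screen, and avoids redundant keys when the same drawing repeats. The segment values and the `min_hold` threshold are illustrative assumptions, not settings from any particular program.

```python
from typing import List, Tuple

Segment = Tuple[float, float, str]  # (start_seconds, end_seconds, mouth_shape)
Key = Tuple[int, str]  # (frame_number, mouth_shape)


def to_keyframes(segments: List[Segment], fps: int = 24, min_hold: int = 1) -> List[Key]:
    """Snap timed mouth-shape segments to the frame grid as swap keys."""
    keys: List[Key] = []
    for start, end, shape in segments:
        start_frame = round(start * fps)
        end_frame = round(end * fps)
        if end_frame - start_frame < min_hold:
            continue  # too short to show on screen; the previous drawing stays up
        if keys and keys[-1][1] == shape:
            continue  # same drawing already held, so no new key is needed
        keys.append((start_frame, shape))
    return keys


track = [
    (0.00, 0.25, "rest"),
    (0.25, 0.30, "MBP"),
    (0.30, 0.60, "AI"),
    (0.60, 0.70, "AI"),
    (0.70, 1.00, "O"),
]
print(to_keyframes(track))
```

Raising `min_hold` trades accuracy for calmer mouth motion, which mirrors the time-versus-precision trade-off between automated and hand-assigned lip sync.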

Challenges of Facial Animation

Animating the human face is a daunting task due to its intricate anatomy and emotional range.

The complexity of muscles, bones, and soft tissues poses significant hurdles in both 2D and 3D workflows.

Most artists run into the following common obstacles:

- Anatomical Precision: In 3D Animation, skin must deform naturally around joints, avoiding unnatural stretches or artifacts.

- Emotional Nuance: Capturing subtle emotion mapping, like a fleeting smirk, requires balancing technical and artistic skills.

- 2D Asset Management: Swapping dozens of mouth and eye shapes for lip sync animation is labor-intensive, especially for long dialogues.

- Resource Intensity: Both 2D and 3D processes demand significant time, skilled animators, and rigorous quality checks.

In 3D Animation, errors like stiff lip curls or misaligned textures can break immersion, requiring expertise in facial rigging and texturing.

2D Animation faces scalability issues, as artists must create extensive shape libraries for varied expressions.

You can read our facial animation tips to get ahead of these challenges!

Facial Motion Capture

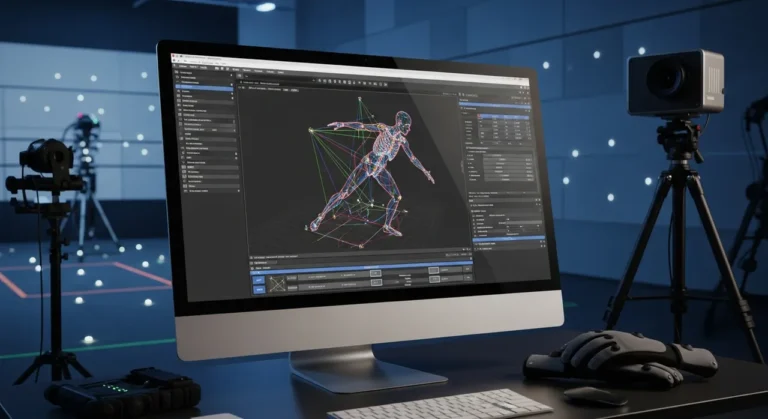

Motion Capture (MoCap) revolutionizes facial animation by recording real human expressions for digital characters.

Key aspects of Facial MoCap include:

- Performance Capture: Actors wear markers or headsets to track facial landmarks, capturing micro-expressions like a lip quiver.

- Real-World Applications: Used in films like Avatar: The Way of Water and games like The Last of Us Part II for lifelike facial expressions.

- Advanced Systems: Vicon and OptiTrack systems record high-fidelity data and can stream it into Unreal Engine for real-time previews.

- Data Cleanup: Raw MoCap data requires refinement to ensure natural movements in the final CGI output.
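Two common cleanup steps are filling short gaps where a marker was briefly occluded and smoothing the high-frequency jitter in raw trajectories. The sketch below works on a single marker coordinate over time and assumes dropped samples are stored as `None`; it uses linear interpolation and a centered moving average, simple stand-ins for the more sophisticated filters real cleanup tools apply. Keep the window small, because aggressive smoothing can erase exactly the micro-expressions the capture was meant to record.

```python
from typing import List, Optional


def fill_gaps(samples: List[Optional[float]]) -> List[Optional[float]]:
    """Linearly interpolate interior runs of None (e.g. an occluded marker)."""
    out = list(samples)
    known = [(i, v) for i, v in enumerate(samples) if v is not None]
    for (a, va), (b, vb) in zip(known, known[1:]):
        for i in range(a + 1, b):  # empty range when a and b are neighbours
            t = (i - a) / (b - a)
            out[i] = va + t * (vb - va)
    return out  # leading or trailing gaps are left as None


def moving_average(samples: List[float], window: int = 3) -> List[float]:
    """Centered moving average; the window shrinks at the ends of the clip."""
    half = window // 2
    smoothed: List[float] = []
    for i in range(len(samples)):
        lo, hi = max(0, i - half), min(len(samples), i + half + 1)
        chunk = samples[lo:hi]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# Marker briefly occluded for two frames, then smoothed (input must have no None left).
raw = [0.0, 1.0, None, None, 4.0, 5.0]
filled = fill_gaps(raw)
print(filled, moving_average(filled))
```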

While efficient, MoCap setups are costly, requiring specialized studios and technicians.

Post-processing ensures expressions align with character designs, maintaining authenticity.

Real-Time Facial Animation

Real-time animation transforms facial animation into an interactive tool, powering immersive experiences in VR, AR, and games. It enables instant expression mapping for dynamic storytelling.

Key applications of real-time facial animation include:

- VR Facial Tracking: Meta Quest Pro headsets use inward-facing cameras to capture facial expressions, animating avatars in platforms like VRChat.

- AR Filters: Snapchat and Instagram use facial landmarks to apply animated effects, like glowing makeup, popular among younger users.

- Game Cutscenes: Unreal Engine’s MetaHuman powers real-time facial expressions in Hellblade II, enhancing narrative depth.

- Mobile Accessibility: Front-facing smartphone cameras enable facial tracking for AR apps, broadening access.

In VR, a user’s smile instantly appears on their avatar, creating authentic social interactions. AR filters, such as Snapchat’s puppy ears, rely on real-time facial landmarks to track movements accurately.
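Landmark-based tracking of this kind usually ends by turning raw 2D landmark positions into animation controls. A common trick is to divide a facial distance by a stable reference, such as the span between the outer eye corners, so the value does not change as the user moves toward or away from the camera. This sketch assumes hypothetical landmark names and a hand-tuned `max_ratio`; real trackers define their own landmark layouts, and some, such as Apple's ARKit, report blendshape-style coefficients directly.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def distance(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def jaw_open_weight(landmarks: Dict[str, Point], max_ratio: float = 0.6) -> float:
    """Estimate a 0..1 jaw-open weight from 2D landmarks (hypothetical names)."""
    # The distance between the outer eye corners serves as a scale reference.
    eye_span = distance(landmarks["left_eye_outer"], landmarks["right_eye_outer"])
    if eye_span == 0.0:
        return 0.0  # degenerate detection; report a closed mouth
    lip_gap = distance(landmarks["upper_lip"], landmarks["lower_lip"])
    # Normalizing makes the value independent of how close the face is to the camera.
    ratio = lip_gap / eye_span
    return max(0.0, min(1.0, ratio / max_ratio))  # clamp to the slider range


# The same expression seen close to the camera and farther away.
near = {"left_eye_outer": (0.0, 0.0), "right_eye_outer": (200.0, 0.0),
        "upper_lip": (100.0, 160.0), "lower_lip": (100.0, 220.0)}
far = {"left_eye_outer": (0.0, 0.0), "right_eye_outer": (100.0, 0.0),
       "upper_lip": (50.0, 80.0), "lower_lip": (50.0, 110.0)}
print(jaw_open_weight(near), jaw_open_weight(far))
```

In a live app this function would run once per camera frame, and its output would drive a jaw-open blendshape on the avatar or filter.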

In game development, MetaHuman allows developers to animate complex cutscenes without extensive rigging.

However, real-time systems demand optimization to run smoothly on consumer hardware, like consoles or mobiles.

Facial Animation in Games

Facial animation is less common in game development due to its complexity, but it’s gaining popularity in story-driven titles.

Games prioritize mechanics, yet facial animation enhances narrative immersion.

Key trends in game-related facial animation include:

- Cinematic Cutscenes: AAA titles like Cyberpunk 2077 use facial expressions for emotional storytelling.

- Efficient Techniques: Blendshapes and automated tools like iClone minimize animation time for tight schedules.

- Real-Time Integration: Star Citizen uses facial tracking for dynamic multiplayer interactions.

- AI-Driven NPCs: AI predicts expressions for non-player characters, enhancing realism in open-world games.

Unreal Engine’s MetaHuman provides pre-rigged faces, enabling indie developers to create cinematic-quality character animation. For example, Hellblade II leverages MetaHuman for real-time cutscenes that rival film visuals.

AI and Machine Learning in Facial Animation

Machine Learning is redefining how faces are animated, automating complex tasks in both 2D and 3D animation.

AI-driven tools are making facial animation faster and more accessible.

Key AI advancements in facial animation include:

- Trained Models: AI systems, trained on vast datasets, predict vertex placements for facial expressions, like a furrowed brow.

- Automated Lip Sync: Tools like iClone and MetaHuman use AI for seamless lip sync animation, reducing manual work.

- Realistic VFX: Ziva Dynamics’ AI simulates skin dynamics, used in Dune: Part Two for hyper-realistic faces.

- Generative AI in 2D: Runway and Midjourney generate facial expression animation frame by frame, though frame consistency remains a challenge.

- Deepfake Technology: AI creates near-authentic facial animations, raising ethical concerns in CGI media.
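As a deliberately tiny stand-in for the trained models mentioned above, the sketch below fits one linear mapping with ordinary least squares, from a single input feature (assumed here to be per-frame audio loudness) to a single output (a jaw-open weight). Production systems typically use neural networks with many inputs and outputs trained on large capture datasets; this only illustrates the fit-then-predict pattern, and the training pairs are made-up numbers.

```python
from typing import List, Tuple

Model = Tuple[float, float]  # (slope, intercept)


def fit_line(xs: List[float], ys: List[float]) -> Model:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var  # assumes the xs are not all identical
    return slope, mean_y - slope * mean_x


def predict_weight(model: Model, x: float) -> float:
    """Predict a blendshape weight and clamp it to the 0..1 slider range."""
    slope, intercept = model
    return max(0.0, min(1.0, slope * x + intercept))


# Made-up training pairs: per-frame audio loudness -> captured jaw-open weight.
loudness = [0.0, 0.25, 0.5, 0.75, 1.0]
jaw_open = [0.0, 0.2, 0.4, 0.6, 0.8]
model = fit_line(loudness, jaw_open)
print(predict_weight(model, 0.6))
```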

AI models streamline facial rigging by predicting muscle movements, as seen in MetaHuman’s pre-rigged models.

Generative AI excels in 2D Animation, producing detailed sequences, but struggles with continuity across frames. Deepfake technology, while innovative, prompts debates over authenticity and misuse in films.

Final Words

Facial animation is an evolving field, constantly reshaped by new inventions and better workflows. Advances in recording and tracking hardware, as well as in software, have made the workflow more approachable. However, it remains one of the most laborious and specialized tasks in animation, and one where skill alone is not enough to create great results: even when skilled artists are available, its time-consuming nature has pushed innovators to look for ways to accelerate the workflow.

For a long time, expensive motion capture suits, cameras, and complex software were the most practical way to create high-quality facial animation. In recent years, however, advances in AI and tracking tools have made facial animation easier to create. Time will show how these advances lead to better workflows and allow more people to create facial animations.