The 3D modeling process is a multi-step workflow that brings characters, objects, and environments to life. From video games to animations, the quality of the final 3D model depends heavily on the care and technical skill applied across many modeling stages. The process begins with gathering visual references and ends with the final render. In this article, we share key insights into the craft behind 3D modeling and outline the steps needed to balance visual quality with practical runtime considerations.

1. Concept and Reference Gathering

Concept art defines the vision and purpose of a 3D model, which is why this stage relies on concept art services. It sets parameters such as art style, level of realism, and materials. With early guidance from concept artists, we can plan the 3D modeling workflow and set clear goals. Once the concept is final, model sheets are delivered as a more detailed guide for modeling.

Having relevant references is critical. For character modeling, anatomical references ensure proper body proportions. For environments, architectural plans or maps of real places provide accurate dimensions. References also help modelers develop an intuitive sense of natural shapes and forms.

2. Blockout

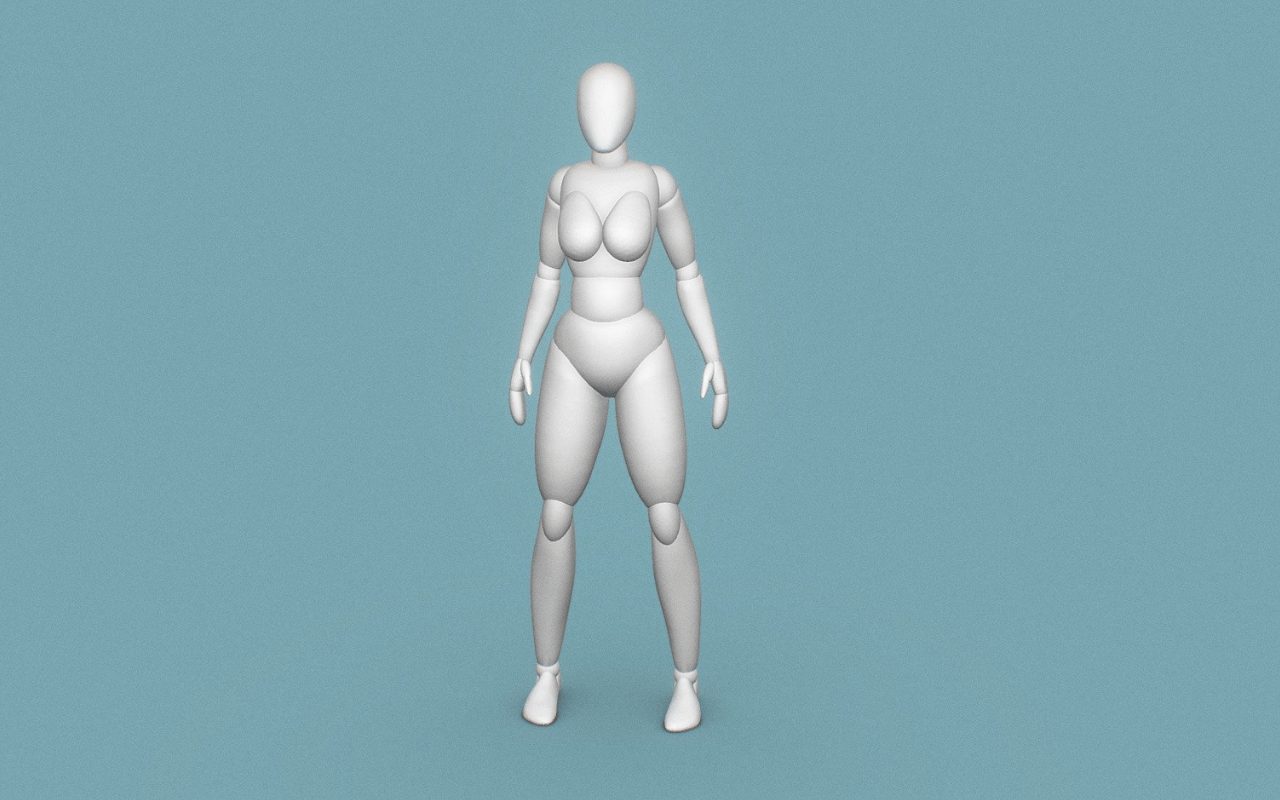

In the blockout stage, we use simple shapes rather than fine details to define the major mass distribution, silhouette, proportions, and overall scale. For example, a character blockout shows the head size relative to the body, limb lengths, and so on. This stage lets us iterate quickly on layout before investing effort in details. Standard primitives such as cubes, spheres, and cylinders are commonly used because they are quick to place and adjust, which makes adding finer details easier in later stages.
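A blockout can be checked numerically as well as by eye. Figure-drawing guides often describe character height in "heads", and roughly seven to eight heads is a common target for a realistic adult. The sketch below assumes a hypothetical `Primitive` record that stores each blockout shape's vertical extent (Z-up, in scene units) and reports how many heads tall the character is; it is not tied to any particular modeling package.

```python
from dataclasses import dataclass


@dataclass
class Primitive:
    """Hypothetical blockout primitive: a label, a shape type, and its vertical extent (Z-up)."""
    name: str
    shape: str  # e.g. "cube", "sphere", "cylinder"
    z_min: float
    z_max: float


def heads_tall(parts: list[Primitive], head_name: str = "head") -> float:
    """Return the character's overall height expressed in head heights."""
    head = next((p for p in parts if p.name == head_name), None)
    if head is None:
        raise ValueError(f"no primitive named {head_name!r}")
    head_height = head.z_max - head.z_min
    if head_height <= 0:
        raise ValueError("head primitive must have a positive height")
    # Overall height spans from the lowest to the highest point of any primitive.
    total_height = max(p.z_max for p in parts) - min(p.z_min for p in parts)
    return total_height / head_height


blockout = [
    Primitive("legs", "cylinder", 0.0, 0.85),
    Primitive("torso", "cube", 0.85, 1.5),
    Primitive("head", "sphere", 1.5, 1.75),
]
print(heads_tall(blockout))  # 7.0
```

Measuring from the lowest to the highest point, rather than summing part heights, keeps the result correct when primitives overlap or sit side by side, as legs and arms do.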

3. Basic Model Shape

The basic model shape stage bridges the gap between blockout and final surface modeling. Forms and proportions become more defined, while surfaces are kept simple at first. For characters, basic anatomical landmarks such as the skull, rib cage, hip bones, and limb proportions are laid out based on references. For hard surface modeling, changes in surface direction and major functional sub-parts are blocked in.

This stage makes it possible to evaluate silhouettes and proportions from the primary viewing angles without over-committing time to a particular pose. For vehicles or buildings, the major component groups are created to assess overall balance.

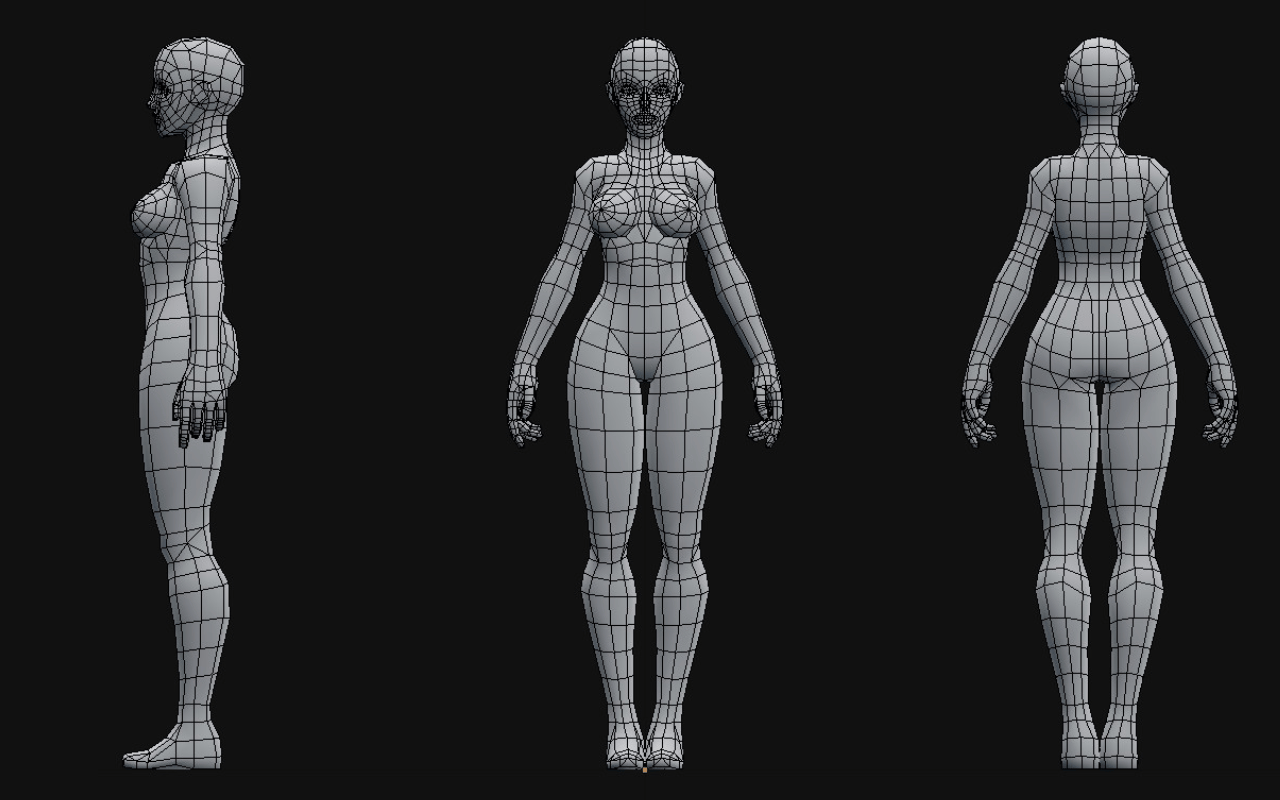

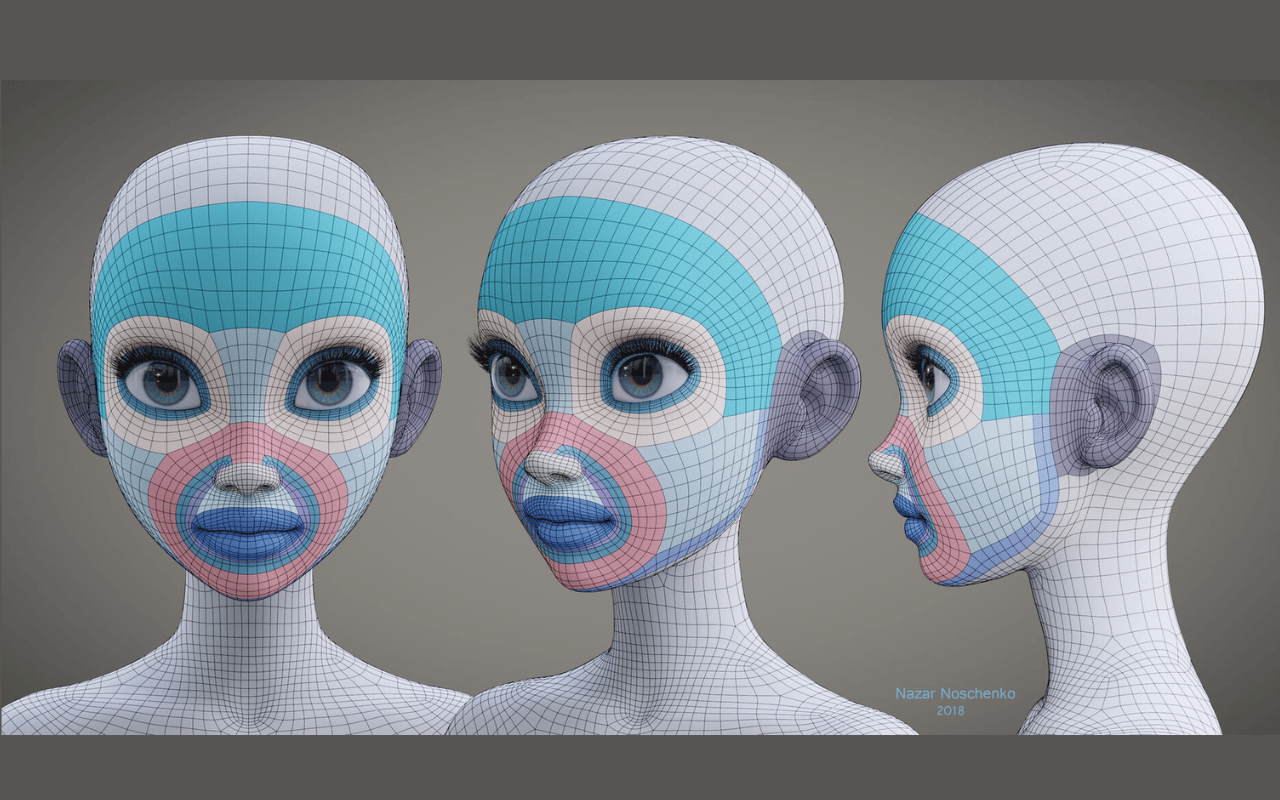

4. Low-Poly Modeling

The low-poly modeling stage creates a base mesh optimized for animation and posing. Edge flow is cleaned up so the mesh deforms well when articulated. Areas that deform heavily, such as joints and regions driven by morph targets (for example, the face), receive more mesh density, while regions needing less detail are simplified. Triangles and n-gons are converted to quads where possible, and the topology is organized so that subdivision surface modifiers give clean results. Polygon budgets for the target hardware platforms are respected from the start. Careful low-poly modeling makes the downstream sculpting, texture baking, and rigging stages more efficient. This technique balances economical use of geometry against animation requirements.

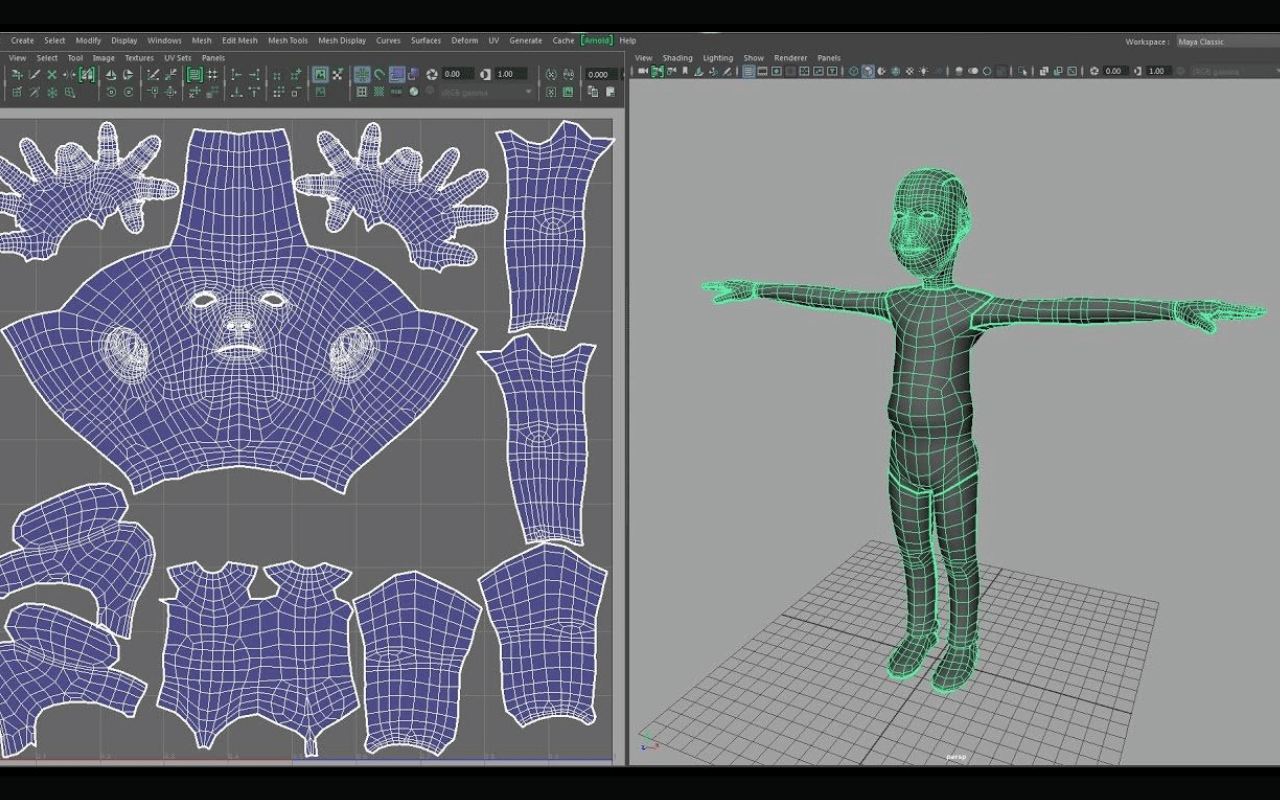
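Polygon budgets are usually counted in triangles, because every face is triangulated before the GPU draws it; an n-sided polygon becomes n − 2 triangles. The sketch below assumes faces are stored as tuples of vertex indices (a simplified, hypothetical mesh format) and checks a mesh against a placeholder budget; real budgets depend on the project and platform.

```python
def triangle_count(faces: list[tuple[int, ...]]) -> int:
    """Triangles the mesh will produce once triangulated (n - 2 per n-sided face)."""
    total = 0
    for face in faces:
        if len(face) < 3:
            raise ValueError(f"degenerate face with {len(face)} vertices: {face}")
        total += len(face) - 2
    return total


def within_budget(faces: list[tuple[int, ...]], budget: int) -> bool:
    """True if the triangulated mesh fits inside the given triangle budget."""
    return triangle_count(faces) <= budget


# Two quads and one triangle -> 2 + 2 + 1 = 5 triangles.
mesh = [(0, 1, 2, 3), (3, 2, 4, 5), (4, 6, 5)]
print(triangle_count(mesh))           # 5
print(within_budget(mesh, budget=4))  # False
```

Counting triangles rather than faces avoids a common surprise: a mesh built from large n-gons can look light in a face count yet triangulate into far more geometry than expected.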

5. UV Mapping

UV mapping is the process of unwrapping 3D mesh geometry into a 2D texture space. It connects 3D geometry with 2D painting workflows across different 3D modeling tools, and it defines how 2D texture maps are applied to the 3D asset.

For organic models, the UV shells are carefully laid out to maximize space for painting complex materials later. Mirroring UVs reduces painting work for symmetric body parts. For hard surface models, UV shells match logical surface regions and repetitions. This makes painting less cumbersome by avoiding disjointed texture spaces.
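To make the idea of projecting 3D geometry into 2D texture space concrete, here is one of the simplest automatic projections: a spherical mapping that turns each point's direction from the model's center into (u, v) coordinates. It is a sketch assuming a Y-up model centered at the origin. Production UVs are usually unwrapped along hand-placed seams instead, partly because this projection stretches texels near the poles and creates a seam where u wraps from 1 back to 0.

```python
import math


def spherical_uv(x: float, y: float, z: float) -> tuple[float, float]:
    """Project a point onto (u, v) in [0, 1] using its direction from the origin (Y-up)."""
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("a point at the projection center has no direction")
    x, y, z = x / length, y / length, z / length
    y = max(-1.0, min(1.0, y))  # guard asin against rounding just outside [-1, 1]
    u = 0.5 + math.atan2(z, x) / (2.0 * math.pi)  # longitude around the Y axis
    v = 0.5 + math.asin(y) / math.pi               # latitude: -90 deg -> 0, +90 deg -> 1
    return u, v


print(spherical_uv(1.0, 0.0, 0.0))  # (0.5, 0.5) on the equator
print(spherical_uv(0.0, 2.0, 0.0))  # (0.5, 1.0) at the north pole
```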

6. Sculpting

The sculpting stage adds high-frequency surface details, skin textures, and finer shapes to the underlying base model. Digital sculpting tools displace and build up millions of micro-polygons on top of the lower-resolution model. Combining specialized brushes, alphas, and stroke settings mimics real-world sculpting workflows.

This high-poly stage pushes forms, shapes, and personality to their final state before the topology is optimized and the sculpted details are baked down to lower-resolution game assets.

7. Retopology

Retopology is the process of rebuilding a clean, quad-based mesh on top of a high-resolution sculpt. While sculpts capture extremely nuanced details, the resulting geometry is usually uneven and far too dense for animation. Retopology produces an optimized mesh that receives the sculpt's details through baked normal and ambient occlusion maps. The goal is the most efficient quad layout that deforms predictably when posed and animated, while the sculpted high-frequency detail is retained in displacement and normal maps.

8. Baking High-Poly to Low-Poly

Baking is the process of transferring surface detail from a high-poly sculpt onto an optimized low-poly game or animation asset. Normal, ambient occlusion, and height maps store the sculpt's surface information without the memory and performance cost of the dense geometry. The low-poly model has different, much lighter topology, but it matches the sculpt's shape and orientation and sits closely over it. Baking tools cast rays from the low-poly surface (often within an adjustable "cage") to find the high-poly surface and record what they hit, such as the high-poly surface direction, as texture maps. Baking preserves most of the painstaking sculpting work as reusable runtime textures, although the finest detail is limited by texture resolution.
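The ray casting is handled by dedicated baking tools, but the final step, storing a surface direction in an image, is simple enough to show. The sketch below assumes an 8-bit tangent-space normal map, where each component of a unit normal is remapped from [-1, 1] to [0, 255]; this is why flat areas of a normal map appear light blue-purple. Engines disagree on the sign of the green channel (the "OpenGL" versus "DirectX" conventions), which this sketch does not handle.

```python
import math


def encode_normal(nx: float, ny: float, nz: float) -> tuple[int, int, int]:
    """Pack a normal vector into 8-bit RGB: each component maps [-1, 1] -> [0, 255]."""
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("cannot encode a zero-length normal")
    return tuple(round((c / length * 0.5 + 0.5) * 255) for c in (nx, ny, nz))


def decode_normal(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Inverse mapping from 8-bit RGB back to a (nearly) unit vector."""
    return tuple(c / 255 * 2.0 - 1.0 for c in (r, g, b))


# A surface pointing straight out of the low-poly surface (no added detail).
print(encode_normal(0.0, 0.0, 1.0))  # (128, 128, 255)
```

The round trip is lossy because each component has only 256 levels, which is one reason very fine sculpted detail depends on the texture's resolution and bit depth.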

9. Texturing

3D texturing is the process of applying 2D bitmap images, called texture maps, to the surface of 3D assets. Textures define base color, patterns, roughness, normals, and other surface qualities, and they are painted to match the required material properties and level of realism. Texturing must deliver pixel-level precision while staying flexible for the artists and engines that use the asset downstream. Modern tools let artists paint directly on the 3D model and can place wear procedurally based on the model's actual geometry, such as its edges and cavities.

10. Rendering in the 3D Modeling Process

Rendering generates 2D images from the geometry, textures, lighting, and materials defined for 3D scenes and assets. Renderers approximate the real-world behavior of light using physics-based models. Light transport calculations determine the visibility, reflection, refraction, and scattering of light as it interacts with different surfaces. The render brings together every visual element built and configured in the scene: offline renderers often use physically based path tracing to produce photorealistic images that mimic real camera behavior, while game engines rely on faster real-time approximations.
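Full light transport is far beyond a short example, but its simplest building block can be shown. The sketch below computes direct lighting on an ideal diffuse (Lambertian) surface from one directional light: reflected radiance = albedo / π × irradiance × cos θ, where θ is the angle between the surface normal and the direction to the light. It assumes no shadows, no bounce light, and linear RGB values; a path tracer evaluates terms like this along many light paths per pixel.

```python
import math

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)


def lambert(albedo: Vec3, normal: Vec3, to_light: Vec3, irradiance: float) -> Vec3:
    """Outgoing radiance (per RGB channel) of a Lambertian surface under one directional light.

    albedo:     fraction of light reflected per channel, 0..1 (linear RGB)
    to_light:   direction from the surface point toward the light
    irradiance: light arriving on a surface that faces the light head-on
    """
    n, l = normalize(normal), normalize(to_light)
    cos_theta = max(0.0, sum(a * b for a, b in zip(n, l)))  # surfaces facing away get nothing
    return tuple(a / math.pi * irradiance * cos_theta for a in albedo)


# A light 60 degrees off the normal delivers half the light of a head-on light.
head_on = lambert((0.8, 0.5, 0.2), (0, 0, 1), (0, 0, 1), irradiance=math.pi)
angled = lambert((0.8, 0.5, 0.2), (0, 0, 1), (math.sqrt(3), 0, 1), irradiance=math.pi)
```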

About the 3D Modeling Process

Demand for 3D design is high these days thanks to the popularity of 3D animations and 3D games. Every object or character you see in a 3D animation is made of a 3D mesh, and the process of making these meshes is called 3D modeling. These 3D objects are based on real objects or on other designs, and together they make up the 3D environment.

The 3D modeling process starts with designing each object in detail. This initial design can take the form of drawings or sculptures. These detailed designs are then recreated in the 3D environment. The resulting models need to be functional and fit properly into the production pipeline. 3D animation is all about movement, so these models must move convincingly, which is why they should be optimized to handle a wide range of deformations.

Pre-Modeling Considerations

Before modeling anything, it helps to set some parameters. These early decisions drive everything from the polygon count to the final visual look. A thoughtful pre-production phase saves time and money on revisions later on.

Establishing Visual Objectives and Technical Limits

Every project has a specific visual identity, and the modeling process needs to achieve it from the get-go. Are you aiming for realism or stylization? Is the style space-age, cartoony, or gritty? These goals not only determine what the outcome will look like but also how you approach form, detail, and surface treatment. Technically, you’ll need to be aware of limitations such as shader compatibility, texture sizes, and polygon budgets based on what engine you’re working with. Balancing artistic vision and technical capability is the primary consideration at this stage.

Aligning with Target Platform Requirements (Game, Animation, VR)

The target platform has a huge bearing on an asset. Real-time environments such as mobile games or VR need lightweight models with clean topology and efficient textures. Film and cinematic animation, on the other hand, can be more complex and detailed, since frames are rendered offline rather than in real time and are far less constrained by hardware. Knowing the target platform lets you make informed decisions about geometry density, texture resolution, and even rigging complexity.

Collaborating with Concept Artists and Art Directors

Modelers don't work in a vacuum. Regular communication with art directors and concept artists helps ensure that the final 3D model looks as good as, if not better than, the original idea. Concept art is the master plan, and regular feedback allows proportions, shapes, and surface detail to be adjusted where needed. This process also keeps the artistic direction consistent across all of a project's assets. Good communication here avoids costly changes later on.

With these parameters set, the model moves through the stages described above.

Post-Modeling Revisions

Once the model is finished and detailed, it doesn’t just go live. It usually goes through several cycles of testing, feedback, and refinement—especially in game development or animation production where functionality is as important as appearance.

Gathering Feedback from Leads or Clients

After a model is complete, it's important to present it to team leads, art directors, or clients for review. Their feedback may involve changes to proportions, silhouette, material accuracy, or functional issues such as distortion at the joints. Feedback can also come from testers or other departments, such as rigging or lighting. Taking this feedback seriously improves the model and brings it closer to being production-ready.

Iterating Based on Gameplay or Animation Integration

Once the model is placed in a game engine or animation pipeline, problems often surface. Armor might clip through the character during a walk cycle, or a prop might not sit well in the hand during an idle pose. Issues like these can trigger a round of quick fixes or even broader overhauls. This integration stage is where technical and artistic requirements truly meet, and it's normal to adjust mesh density, pivot points, or UVs at this point.

Version Control and File Organization

Tracking the different versions of a model is a key part of an effective workflow. Whether you work alone or in a very large team, consistent naming schemes and version control tools (such as Git, Perforce, or even a well-organized folder system) prevent lost or overwritten work. Organized files also make it easier to return to assets months later to revise them or repurpose them for other projects.
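A naming scheme is easiest to follow when it is automated. The sketch below assumes a hypothetical convention of `<asset>_v<number>.<ext>` with zero-padded numbers (for example, `knight_armor_v007.blend`) and computes the next file name; adapt the pattern to whatever convention your team has agreed on.

```python
import re
from pathlib import PurePath

# Hypothetical convention: <asset>_v<zero-padded number><extension>
VERSION_PATTERN = re.compile(r"(?P<base>.+)_v(?P<num>\d+)")


def next_version(filename: str) -> str:
    """Return the file name for the next version, starting at v001 if unversioned."""
    path = PurePath(filename)
    match = VERSION_PATTERN.fullmatch(path.stem)
    if match:
        base = match["base"]
        number = int(match["num"]) + 1
        width = len(match["num"])  # keep the existing zero padding
    else:
        base, number, width = path.stem, 1, 3
    return str(path.with_name(f"{base}_v{number:0{width}d}{path.suffix}"))


print(next_version("knight_armor_v007.blend"))  # knight_armor_v008.blend
print(next_version("knight_armor.blend"))       # knight_armor_v001.blend
```

Zero padding keeps versions sorted correctly in file browsers, so `v010` lists after `v009` rather than after `v001`.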

Common Problems with the 3D Modeling Process

Even professional modelers run into problems during the process, and these can affect the look, performance, and usability of an asset. Knowing about them in advance makes them easier to avoid.

Balancing Detail with Performance

Perhaps the hardest part of modeling for real-time rendering is knowing when to stop. Adding detail makes things look better, but too much geometry or oversized textures can lower performance, especially on lower-end hardware. The trick is to create the illusion of detail through clever modeling, texturing, and baking. It is sometimes more efficient to represent surface detail with normal maps or shaders than with geometry.
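Texture size is a good example of detail that quietly costs performance. The sketch below estimates the memory of an uncompressed texture, optionally including its mipmap chain (each level half the width and height of the previous one, down to 1 × 1). It assumes 4 bytes per texel (8-bit RGBA); block-compressed GPU formats use considerably less, so treat the numbers as an upper bound for comparison.

```python
def texture_memory_bytes(width: int, height: int,
                         bytes_per_texel: int = 4, mipmaps: bool = True) -> int:
    """Approximate memory of an uncompressed texture, optionally with a full mip chain."""
    if width < 1 or height < 1:
        raise ValueError("texture dimensions must be positive")
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)  # next mip level
    return total


MIB = 1024 * 1024
print(texture_memory_bytes(2048, 2048, mipmaps=False) / MIB)  # 16.0
print(texture_memory_bytes(1024, 1024, mipmaps=False) / MIB)  # 4.0
```

Halving the resolution in both directions cuts memory to a quarter, and a full mip chain adds roughly one third on top, which is why dropping a rarely seen asset from 2048 to 1024 pixels is often an easy win.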

Avoiding Topology Mistakes

Good topology is not just pretty to look at; it makes a significant difference in everything from animation to rendering. Poor edge flow can cause awkward deformation during movement, shading artifacts under lighting, and unnecessary geometry in static models. A common mistake is leaving triangles or n-gons in areas that need to deform, or failing to run edge loops around joints. Fixing topology issues up front saves a lot of time during rigging and skinning.
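Checking for stray triangles and n-gons in deforming areas is easy to automate. The sketch below assumes faces are stored as tuples of vertex indices and that you have a hypothetical set of vertex indices around a joint (in practice, you might derive it from skin weights in your modeling tool). It lists every non-quad face that touches the joint region.

```python
def problem_faces(faces: list[tuple[int, ...]],
                  joint_vertices: set[int]) -> list[tuple[int, str]]:
    """Return (face index, description) for every non-quad face touching the joint region."""
    problems = []
    for index, face in enumerate(faces):
        if len(face) == 4:
            continue  # quads are what we want in deforming areas
        if joint_vertices.intersection(face):
            kind = "triangle" if len(face) == 3 else f"{len(face)}-gon"
            problems.append((index, kind))
    return problems


faces = [(0, 1, 2, 3), (1, 4, 2), (4, 5, 6, 7, 8), (9, 10, 11)]
elbow = {1, 2, 4, 5}  # hypothetical vertices around an elbow joint
print(problem_faces(faces, elbow))  # [(1, 'triangle'), (2, '5-gon')]
```

The triangle at index 3 is ignored because it lies outside the joint region, where a stray triangle is usually harmless.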

Dealing with Texture Seams and UV Stretching

UV mapping brings its own headaches, especially with organic models or complex curves. Seams become visible if they are poorly placed or not blended during texturing. Stretching warps patterns and breaks the illusion of realism. UVs should be unwrapped so that texel density is distributed evenly and seams are hidden in less conspicuous places, such as under the arm or along sharp edges. Packing UVs efficiently and verifying them with a checker texture prevents these common errors.
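Texel density can also be checked numerically. For a face or UV shell, it is the square root of (UV area × texture width × texture height ÷ surface area in the scene), giving texels per world unit. The sketch below assumes UV area is measured as a fraction of the 0-1 UV square and that stretching within each face is uniform; a checker texture is still the best way to spot local distortion.

```python
import math


def texel_density(world_area: float, uv_area: float,
                  texture_size: tuple[int, int]) -> float:
    """Texels per world unit for a face or UV shell.

    world_area:   surface area in the scene, in world units squared (e.g. m^2)
    uv_area:      area of the same surface in UV space, as a fraction of the 0-1 square
    texture_size: (width, height) of the texture in pixels
    """
    if world_area <= 0:
        raise ValueError("world_area must be positive")
    width, height = texture_size
    return math.sqrt(uv_area * width * height / world_area)


def densities_consistent(densities: list[float], tolerance: float = 0.1) -> bool:
    """True if all densities lie within `tolerance` (relative) of the highest one."""
    highest = max(densities)
    return (highest - min(densities)) / highest <= tolerance


# Two 1 m^2 panels on a 2048 x 2048 texture: one gets a quarter of the UV space, one a sixteenth.
a = texel_density(1.0, 0.25, (2048, 2048))    # 1024.0 texels per metre
b = texel_density(1.0, 0.0625, (2048, 2048))  # 512.0 texels per metre
print(densities_consistent([a, b]))           # False: panel b will look blurrier
```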

Conclusion

Each stage of the process makes important tradeoffs between visual quality, functionality, and performance. Modelers have to work smart to retain high geometric and texture details from sculpts without overloading game engines. UV layouts created early in modeling connect the 3D and 2D stages down the pipeline. Retopology redistributes the mesh based on animation, deformation, and runtime considerations. With so many interdependent steps, clear communication and iteration are crucial to aligning artistic goals with practical constraints.